More than a month after GDPR came into force it is still a hot topic. Many people have discovered that their own simple hobby blogs fall within the scope of GDPR compliance and have struggled to understand exactly what they need to do to remain on the right side of the law.

There are plenty of blog posts and guidance sites out there now offering advice on banners, privacy statements, and what it means to be a data controller, which will help the typical blogger navigate this minefield.

But there is one area that is still problematic even for tech-savvy bloggers: the rule that no cookies other than a basic, non-data-based session cookie may be written without the express prior consent of a site visitor from a GDPR country. Blogspot, which hosts many of my sites, has been fairly good about controlling its own cookies (though for some reason the navbar in some templates still occasionally leaks cookies while the cookie banner is on show). What they don't cover - simply because it's really not their problem - is control of cookies written by any third party gadgets that bloggers add to their sites, eg: adverts, twitter feeds, social media tools and affiliate product links.

I suggest at this point all blog owners pay a visit to

https://www.cookiemetrix.com/ and type in the URL of their blog to see whether cookies are being written and whether general GDPR compliance is met. Checking your site via proxy sites is NOT a safe way of determining this, as numerous tests have shown that what is served by proxies can be very different from a direct connection because of their own internal processes. Use a dedicated free GDPR test site like CookieMetrix.

If third party cookies are an issue - read on!

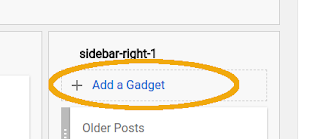

OK.... first a bit of background. How do bloggers normally add a third party script or widget to their Blogspot site? Well, they could roll up their sleeves and directly edit the HTML/XML code for their templates, but the vast majority will add a Blogspot HTML/Javascript Gadget:

Easy enough... but provides no control over what that script then goes on to do as far as cookie control goes.

What my solution does is to use the same process, but instead create a placeholder for where these scripts will go. This allows us to defer loading those scripts until we have checked if the visitor needs to give cookie consent, and if so that they have.

So how does this work? Well, most people with a little background in HTML/CSS/Javascript will realise that you can create a <div> tag, give it a unique ID and modify it later. It is the basis of pretty much all dynamic page content. See

https://www.w3schools.com/js/js_htmldom_elements.asp for examples etc

The problem is that while you can retrospectively change the style, the text content and most HTML tags within an element, any scripts you try to inject as raw markup (for example via innerHTML) will not be executed: the page load has finished, and browsers deliberately skip such scripts as a security measure against possible cross-site scripting exploits.... Bugger!

To solve this we need to use some clever asynchronous reading and writing of the script to the page which allows the scripts to still be parsed after the page has finished loading.

I spent quite a bit of time looking at ways of doing this, and in fact for several of my other sites found a solution that works well with most third party includes... but some third party scripts still proved problematic - ones using document.write.

Having gone round in circles for some time I re-discovered in my notes on useful libs Postscribe

https://krux.github.io/postscribe/ which actually solves this problem very simply. Yay!

A few quick tests confirmed that it was possible to use postscribe and a little jQuery to retrospectively add executable scripts long after the page load has finished....

And that forms the basis of the system I now use.

So, let's first describe how to use my system to add third party scripts that are deferred until cookie compliance agreement is met, and then I will break down the code to explain how it works.

The first thing you need to do is pop over to

https://ipdata.co/ and click on the "Get a free API key" button - because we will be using their API to work out if the visitor is from a GDPR country. You will be emailed a key which you need to include in the code that follows. A free API key gets you 1500 free location checks a day. (You can sign up to a paid solution if your site has more traffic, or you could look at using cookies to save the visitor country code once cookies are agreed - to limit the number of API calls needed - but that is beyond the scope of this blog post.)

Next, we are going to be using both jQuery and Postscribe libs, so you need to add them to the <head> of your site. To do this open up the theme and click on edit HTML

... And then paste :

<script src='https://ajax.googleapis.com/ajax/libs/jquery/3.3.1/jquery.min.js'/>

<script src='https://cdnjs.cloudflare.com/ajax/libs/postscribe/2.0.8/postscribe.min.js'/>

immediately after the opening <head> tag.

Then click on Save Theme to finish. This means that when your page loads, those two javascript libraries are going to be available for our code which will be further down the page.

So far so good.

Now, rather than adding the third party code direct to a Blogspot gadget as you would normally, we add a placeholder div with a unique id, eg: myPlaceHolder1 (you can add as many as you like, but they all need a unique id), using:

<div id="myPlaceHolder1"></div>

Next, create an HTML / Javascript gadget as close to the bottom of the page as you can :

and paste in the following code:

<script>

function showDivs(){

// this is the bit you need to edit =============================================================================================

// (see blog post for details)

// add amazon search widget

$(function() {

postscribe('#myPlaceHolder1','<script type="text/javascript">amzn_assoc_ad_type ="responsive_search_widget";.... etc .... <\/script>');

});

// add twitter widget

$(function() {

postscribe('#myPlaceHolder2','<a class="twitter-timeline" data-width="300" data-height="600" data-theme="dark" .... etc .... <\/script>');

});

//etc...

}

// array of country codes where GDPR cookie agreement is needed (source : https://www.hipaajournal.com/what-countries-are-affected-by-the-gdpr/)

// if other countries needing a cookie accept add the country code to this array

var reqGDPR = ["AT","BE","BG","HR","CY","CZ","DK","EE","FI","FR","DE","GR","HU","IE","IT","LV","LT","LU","MT","NL","PL","PT","RO","SK","SI","ES","SE","GB"];

//functions and globals

// set up an interval timer that fires every five seconds - it repeatedly calls the function pollCookieAgree, which checks whether

// the agreement cookie has been written (showing that the visitor has agreed to the gdpr cookie terms). The timer is cancelled

// once that cookie is found, or when the visitor turns out not to be from a gdpr country

// (we pass the function itself rather than a string, which avoids an eval-style call)

var ourInterval = setInterval(pollCookieAgree, 5000);

//general purpose cookie reading function

function readCookie(name) {

var nameEQ = name + "=";

var ca = document.cookie.split(';');

for (var i = 0; i < ca.length; i++) {

var c = ca[i];

while (c.charAt(0) == ' ') c = c.substring(1, c.length);

if (c.indexOf(nameEQ) == 0) return c.substring(nameEQ.length, c.length);

}

return null;

}

// interval based listener function - called until cancelled by ourInterval further up the script

function pollCookieAgree(){

// call the readCookie script and grab its returned value

var myCookie = readCookie("displayCookieNotice");

if (myCookie==="y"){

// yes - we have found the cookie that shows cookies have been agreed to

console.log("found the cookie acceptance cookie");

//OK, we have found the cookie that shows that the cookie banner has been accepted

//we dont need the timer any more

clearInterval(ourInterval);

// safe to show the divs

console.log("Calling the jQuery and postscribe async read and insertion of the ads");

// we can now use a combination of jQuery and postscribe to asynchronously modify

// each of the placeholders, using the function showDivs() which we edited at the top of this script

showDivs();

}

}

// This is where the main activity takes place!

// let's find out where our visitor is from. We put in a jQuery call to the geolocation API @ ipdata.co to get the user's location data

// This returns a JSON formatted block of data based on the approximate location (by IP address) of the visitor. Remember to add your API key!

// No, thats not a typo-----------------------------------------v - the closing ) is down at the bottom of the script

$.getJSON('https://api.ipdata.co?api-key=01234567890123456789',

// we set up a callback for the response

function(geodata) {

// dump the result out to the console log for debug - always handy!

// there is quite a bit of useful info there - but remember we are trying to meet GDPR!!

// You really should do nothing else with this data unless your visitor has specifically agreed to it.

console.log(geodata);

// now see if the recovered country code (held in geodata.country_code) occurs in the array of gdpr codes

// which we declared right at the top of the script

if (reqGDPR.includes(geodata.country_code)){

// Yes :: OK this is a GDPR situ!

// make a note to the console log that we have realised the visitor is from a GDPR country

console.log("GDPR visitor from : " + geodata.country_code);

// need do nothing else as the timer based cookie check function will run every five seconds to check

// if cookies policy has been accepted, and will not call the ads until that is confirmed

}else{

// Nope, looks like we are good... this visitor is not from a place where GDPR applies : go get those ads!

//we dont need the interval timer any more

clearInterval(ourInterval);

// we can now use a combination of jQuery and postscribe to asynchronously modify

// each of the placeholders, using the function showDivs() which we edited at the top of this script

showDivs();

}

}

);

</script>

First, edit the section about half way down :

$.getJSON('https://api.ipdata.co?api-key=01234567890123456789',

Replace the placeholder key (01234567890123456789) with your own API key.

Then take the third party script that was provided by twitter or whoever, and edit the code that you have just pasted, replacing everything between the second pair of single quotes with the third party script that you want to include.

// add twitter widget

$(function() {

postscribe('#myPlaceHolder2','<a class="twitter-timeline" data-width="300" data-height="600" data-theme="dark" .... etc .... <\/script>');

});

Repeat for however many third party scripts you have.

IMPORTANT: you will need to escape the closing script tag in the third party code if there is one. eg: if the code you pasted from the third party site contains </script> you need to add a backslash so you end up with

<\/script>

Similarly, if the script you paste in has any single quotes in it, you will need to put a backslash before them.
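If you would rather not do that escaping by hand, the two rules above are easy to automate. The following is only a rough sketch: escapeForSingleQuotes is a made-up helper name (it is not part of postscribe or of my script), and it assumes you run it once in the browser console or in Node and then paste the result between the single quotes. It also escapes backslashes and line breaks, which a single-quoted Javascript string cannot hold as-is.

```javascript
// Hypothetical helper (not part of the original script or of postscribe):
// prepares a third party snippet for pasting between single quotes.
function escapeForSingleQuotes(snippet) {
  return snippet
    .replace(/\\/g, '\\\\')                  // backslashes first, so later escapes are not doubled
    .replace(/'/g, "\\'")                    // a bare single quote would end the string early
    .replace(/\r?\n/g, '\\n')                // raw line breaks are not allowed inside '...'
    .replace(/<\/script>/gi, '<\\/script>'); // stop the browser closing our own script block
}

// Made-up example snippet, standing in for whatever the third party gave you
var snippet = "<script>var who = 'me';<\/script>";

console.log(escapeForSingleQuotes(snippet));
// prints: <script>var who = \'me\';<\/script>
```

The printed text is what goes between the second pair of single quotes in the postscribe call.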

That's it... that is all you need to do. Each time you add a third party script or widget to your site, create a placeholder div and add another postscribe block like the one above inside showDivs(), editing the placeholder id and adding the third party script as described.

Your site will now check whether the visitor comes from a GDPR region and, if so, wait for them to agree to cookies before loading the third party scripts. If not, the scripts will load shortly after the page has loaded.

And now, the description of how it works...

Let's look at this segment first:

// set up an interval timer that fires every five seconds - it repeatedly calls the function pollCookieAgree, which checks whether

// the agreement cookie has been written (showing that the visitor has agreed to the gdpr cookie terms). The timer is cancelled

// once that cookie is found, or when the visitor turns out not to be from a gdpr country

// (we pass the function itself rather than a string, which avoids an eval-style call)

var ourInterval = setInterval(pollCookieAgree, 5000);

.

.

.

.

.

// interval based listener function - called until cancelled by ourInterval further up the script

function pollCookieAgree(){

// call the readCookie script and grab its returned value

var myCookie = readCookie("displayCookieNotice");

if (myCookie==="y"){

// yes - we have found the cookie that shows cookies have been agreed to

console.log("found the cookie acceptance cookie");

//OK, we have found the cookie that shows that the cookie banner has been accepted

//we dont need the timer any more

clearInterval(ourInterval);

// safe to show the divs

console.log("Calling the jQuery and postscribe async read and insertion of the ads");

// we can now use a combination of jQuery and postscribe to asynchronously modify

// each of the placeholders, using the function showDivs() which we edited at the top of this script

showDivs();

}

}

Basically we are creating a "listener" script. The initialization -

var ourInterval = setInterval(pollCookieAgree, 5000);

Sets up a recurring timer to call the pollCookieAgree function once every five seconds. (This will execute forever until it is cancelled).

This in turn uses the generic cookie reading function readCookie(name) to check for the presence of a cookie called "displayCookieNotice". (This will have been written out by Blogspot once a visitor has agreed to the cookie banner terms.)
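To make that check easier to play with, here is a small sketch of the same logic with the cookie string passed in as a parameter, so it can be tried in Node or the browser console without a real cookie jar. The names readCookieFrom and hasCookieConsent are my own for this illustration and do not appear in the script above; in a browser you would pass document.cookie.

```javascript
// Same parsing idea as readCookie(), but the cookie string is passed in
// so the function can be tried outside a browser.
function readCookieFrom(cookieString, name) {
  var nameEQ = name + "=";
  var parts = cookieString.split(";");
  for (var i = 0; i < parts.length; i++) {
    var c = parts[i].replace(/^\s+/, ""); // drop the space that follows each ';'
    if (c.indexOf(nameEQ) === 0) {
      return c.substring(nameEQ.length);  // everything after "name="
    }
  }
  return null; // cookie not present
}

// Hypothetical helper: true only when the Blogspot banner cookie is set to "y".
function hasCookieConsent(cookieString) {
  return readCookieFrom(cookieString, "displayCookieNotice") === "y";
}

console.log(hasCookieConsent("theme=dark; displayCookieNotice=y")); // true
console.log(hasCookieConsent("theme=dark"));                        // false
```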

If it is not found, the function does nothing and waits until it is next called by the interval timer, or is cancelled externally.

If it does find the cookie, however, it is safe to continue loading the third party scripts. It first cancels the interval timer as it is no longer needed, then, after logging to the console, calls our function showDivs().

function showDivs(){

// this is the bit you need to edit =============================================================================================

// (see blog post for details)

// add amazon search widget

$(function() {

postscribe('#myPlaceHolder1','<script type="text/javascript">amzn_assoc_ad_type ="responsive_search_widget";.... etc .... <\/script>');

});

// add twitter widget

$(function() {

postscribe('#myPlaceHolder2','<a class="twitter-timeline" data-width="300" data-height="600" data-theme="dark" .... etc .... <\/script>');

});

//etc...

}

This does all the hard work of re-inserting the deferred third party scripts using postscribe.

postscribe('#myPlaceHolder1','<script>.... etc .... <\/script>');

This passes the script source held between the second pair of single quotes to postscribe, which buffers it and does an async child append to the target div named between the first pair of single quotes. Problems with things like scripts that use document.write are taken care of. Simple as that :)

But...!!!

That is only half the story. If a visitor from a non-GDPR country arrived, the cookie checking script would just loop forever - Blogspot only writes that cookie when someone agrees to the GDPR banner.

We need a second line of attack...

This is where the ipdata API call comes in.

// array of country codes where GDPR cookie agreement is needed (source : https://www.hipaajournal.com/what-countries-are-affected-by-the-gdpr/)

// if other countries needing a cookie accept add the country code to this array

var reqGDPR = ["AT","BE","BG","HR","CY","CZ","DK","EE","FI","FR","DE","GR","HU","IE","IT","LV","LT","LU","MT","NL","PL","PT","RO","SK","SI","ES","SE","GB"];

.

.

.

.

// let's find out where our visitor is from. We put in a jQuery call to the geolocation API @ ipdata.co to get the user's location data

// This returns a JSON formatted block of data based on the approximate location (by IP address) of the visitor. Remember to add your API key!

// No, thats not a typo-----------------------------------------v - the closing ) is down at the bottom of the script

$.getJSON('https://api.ipdata.co?api-key=01234567890123456789',

// we set up a callback for the response

function(geodata) {

// dump the result out to the console log for debug - always handy!

// there is quite a bit of useful info there - but remember we are trying to meet GDPR!!

// You really should do nothing else with this data unless your visitor has specifically agreed to it.

console.log(geodata);

// now see if the recovered country code (held in geodata.country_code) occurs in the array of gdpr codes

// which we declared right at the top of the script

if (reqGDPR.includes(geodata.country_code)){

// Yes :: OK this is a GDPR situ!

// make a note to the console log that we have realised the visitor is from a GDPR country

console.log("GDPR visitor from : " + geodata.country_code);

// need do nothing else as the timer based cookie check function will run every five seconds to check

// if cookies policy has been accepted, and will not call the ads until that is confirmed

}else{

// Nope, looks like we are good... this visitor is not from a place where GDPR applies : go get those ads!

//we dont need the interval timer any more

clearInterval(ourInterval);

// we can now use a combination of jQuery and postscribe to asynchronously modify

// each of the placeholders, using the function showDivs() which we edited at the top of this script

showDivs();

}

}

);

The first thing we do is build an array of the two-character country codes for every country that implements GDPR:

var reqGDPR = ["AT","BE","BG","HR","CY","CZ","DK","EE","FI","FR","DE","GR","HU","IE","IT","LV","LT","LU","MT","NL","PL","PT","RO","SK","SI","ES","SE","GB"];

Next we use jQuery to get a JSON formatted object back from the ipdata API and set up a callback:

$.getJSON('https://api.ipdata.co?api-key=01234567890123456789',

// we set up a callback for the response

function(geodata) {...}

(See

https://g7nbp.blogspot.com/2018/04/using-jquery-and-ipdata-api-to-serve.html for more info).

if (reqGDPR.includes(geodata.country_code)){....}

We compare the returned geodata.country_code to our array of GDPR countries, and if there is a match, we do nothing... because our interval timed script will be along shortly to keep checking for the agreement cookie.

If we don't find a match then we know that this is not a GDPR country anyway, so we cancel the timer and call showDivs() to begin writing out the deferred third party scripts to the placeholder divs...
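One thing worth being aware of: if the reply ever arrives without a usable country_code, reqGDPR.includes(undefined) is false, so the code as written drops into the "not GDPR" branch and loads the scripts. A more cautious variant might look something like the sketch below. The function name decideAction and the "wait" / "load" return values are made up for this example, and treating an unusable reply as "needs consent" is my assumption about what a careful site owner would want. I have used indexOf rather than includes only because older browsers lack includes.

```javascript
// Same list as in the main script
var reqGDPR = ["AT","BE","BG","HR","CY","CZ","DK","EE","FI","FR","DE","GR","HU","IE",
               "IT","LV","LT","LU","MT","NL","PL","PT","RO","SK","SI","ES","SE","GB"];

// Hypothetical helper: decide what to do once the geolocation reply arrives.
// Returns "wait" when consent is needed, "load" when it is not.
function decideAction(geodata) {
  var code = geodata && geodata.country_code;
  if (typeof code !== "string" || code.length !== 2) {
    // assumption: an unusable reply is treated as needing consent (fail safe)
    return "wait";
  }
  return reqGDPR.indexOf(code.toUpperCase()) !== -1 ? "wait" : "load";
}

console.log(decideAction({ country_code: "FR" })); // wait
console.log(decideAction({ country_code: "US" })); // load
console.log(decideAction({}));                     // wait
```

Inside the getJSON callback you would then cancel the timer and call showDivs() only when the result is "load".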

Jobsagoodun!

So, we have catered for both GDPR visitors who may or may not have agreed to cookies, and to non GDPR visitors.
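There is one corner case I can think of: if the ipdata reply is slow and the agreement cookie is already present, the timer and the API callback could in principle both end up calling showDivs(), and the widgets would be written twice. I have not seen this happen in practice, but if you want to guard against it, a small run-once wrapper is enough. The once helper and the showDivsOnce name below are made up for this sketch, and the counter simply stands in for the real showDivs() body.

```javascript
// Hypothetical helper: wraps a function so that only the first call does anything.
function once(fn) {
  var called = false;
  return function () {
    if (called) {
      return;            // second and later calls are ignored
    }
    called = true;
    return fn.apply(this, arguments);
  };
}

// Stand-in for showDivs(): just counts how often it really runs
var calls = 0;
var showDivsOnce = once(function () {
  calls++;
});

showDivsOnce(); // e.g. called by the interval timer
showDivsOnce(); // e.g. called later by the API callback
console.log(calls); // 1
```

In the real script you would wrap showDivs the same way and call the wrapped version from both places.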

I hope that makes sense, and that you can use a variant of the above to solve any third party script issues you may have.